Getting your website listed in search engine results is the foundation of online visibility and organic traffic success. Without proper listing in search engine databases, even the most expertly designed website remains invisible to potential customers searching for your products or services. Understanding how search engines discover, process, and display your web pages—and how they store your website’s content for retrieval in search results—is crucial for any business looking to establish a strong online presence.

Search engine listing involves a systematic process where search engines like Google, Bing, and Yahoo crawl your website, index your content, and determine where your pages should appear in search results. This process isn’t automatic or instant—it requires strategic planning, proper implementation, and ongoing optimization to ensure your site gets discovered and ranked appropriately.

In this comprehensive guide, you’ll learn the essential steps to get your website properly listed in search engines, from initial submission through ongoing performance monitoring. We’ll cover the technical requirements, optimization strategies, and best practices that help search engines understand and value your content. That includes supplying specific details such as descriptions, images, and location information, which improve your site’s visibility and ranking and ultimately drive more organic traffic to your site.

Key Takeaways

- Search engine listing involves getting your website crawled, indexed, and ranked by search engines like Google, Bing, and Yahoo

- Submit sitemaps through Google Search Console and Bing Webmaster Tools to accelerate the listing process

- Optimize your site structure, content, and technical elements to improve search engine visibility

- Use proper URL structure, meta tags, and descriptive content to help search engines understand your pages

- Most websites get discovered automatically through links, but manual submission speeds up the process

- Focus on Google (90%+ market share) and Bing (which also covers Yahoo) for maximum impact

- Ensure your business information includes a consistent phone number across all listings to improve local SEO and build search engine trust

Introduction to Search Engines

Search engines are powerful answer machines that help users discover, understand, and organize the vast amount of content available on the internet. Their primary goal is to deliver the most relevant search results for every query, ensuring users quickly find the information, products, or services they need. Major search engines like Google, Bing, and Yahoo use advanced algorithms to scan the web, evaluate website content, and determine which pages best match a user’s search intent. For website owners and businesses, understanding how search engines operate is essential for improving visibility and ensuring your website appears in front of the right audience. By optimizing your site for search, you increase the chances that your web pages will be discovered and ranked highly in search results, driving more users to your site.

How Search Engines Work

Search engines operate through a three-step process: crawling, indexing, and ranking. Crawling is the first step, where search engines deploy automated bots (often called crawlers or spiders) to discover new and updated content across the web. Once a web page is found, the search engine moves to indexing, which involves storing and organizing the page’s content in a massive database known as the index. This index allows the search engine to quickly retrieve relevant information when a user submits a search query. The final step is ranking, where the search engine evaluates hundreds of ranking signals, such as content quality, page speed, and user engagement, to determine the order in which results appear. Google’s algorithm, for example, is widely reported to weigh more than 200 ranking signals, although Google does not publish a complete list. Understanding this process helps website owners optimize their sites to improve their chances of appearing prominently in search engine results.

Understanding the Search Engine Listing Process

The search engine listing process is how your website’s pages become discoverable in search engine results. It starts with crawling, where search engines like Google use automated crawlers to scan the web for new content and updates. Once your web pages are crawled, they are indexed—meaning the content is stored in the search engine’s vast database, ready to be retrieved for relevant queries. The final step is ranking, where indexed pages are evaluated and ordered based on their relevance to a user’s search. Website owners can influence this process by optimizing their site’s structure, ensuring new content is easily accessible, and using effective meta tags. By making your website easy for search engines to crawl and understand, you increase the likelihood that your pages will be indexed and ranked for the right search queries, helping more users discover your content in search engine results.

Search engines discover websites through three main processes: crawling, indexing, and ranking. This systematic approach ensures that when users search for information, products, or services, they receive the most relevant and authoritative results possible.

Crawling involves search engine bots following links to discover new and updated content across the web. Google crawls billions of web pages daily, using sophisticated algorithms to determine which pages to visit and how frequently to return. Google crawls web pages at different frequencies depending on factors like the site’s popularity, content update rate, and site structure. These automated programs, often called spiders or crawlers, start with known pages and follow links to discover new content, creating a vast network of interconnected information.

Indexing stores discovered content in the search engine’s database (in Google’s case, the Google index) so it can be retrieved for search results. During this phase, search engines analyze a page’s content, extract keywords and topics, understand page structure, and determine the relationships between different pages on your site. The indexing process helps search engines quickly retrieve relevant pages when users submit search queries.

Ranking determines the order of search results based on relevance and authority signals. Search engines evaluate hundreds of ranking signals, including content quality, user experience metrics, mobile-friendliness, page speed, and external link authority. Your website must be accessible to crawlers to appear in search engine results pages (SERPs), making essential SEO principles and technical optimization a critical foundation for success.

Most organic traffic goes to the first page of search results; commonly cited industry figures suggest that around 75% of users never go past it. This makes understanding and optimizing for the listing process essential for driving meaningful traffic to your website.

How to Submit Your Website to Major Search Engines

While search engines can discover most websites through natural crawling and linking, manual submission significantly accelerates the process and ensures comprehensive coverage of your site’s important pages.

Set up Google Search Console and verify ownership through a DNS TXT record or one of the other available methods, such as an HTML file upload or an HTML meta tag. DNS verification involves adding a TXT record that Google provides to your domain’s DNS settings to confirm that you own the domain. Google Search Console provides essential tools for monitoring your site’s presence in Google Search results and identifying potential issues that could impact visibility. The platform offers detailed insights into how Google crawls and indexes your site, making it an indispensable resource for website owners.

Submit XML sitemaps in Google Search Console through the Sitemaps report (under Indexing in the current interface). An XML sitemap acts as a roadmap for search engines, listing the pages you want indexed along with optional metadata such as each page’s last-modified date. The sitemap format also defines priority and change-frequency fields, but Google has said it ignores those values, so an accurate last-modified date matters most. When you submit sitemaps, you’re essentially telling search engines about new pages and content updates, helping them discover and index your content more efficiently.
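As a rough illustration of what a sitemap file contains, the sketch below builds one with Python’s standard library. The `build_sitemap` helper, the example.com URLs, and the dates are assumptions made for this example; many CMS platforms and SEO plugins generate the file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for (url, lastmod) pairs.

    `pages` is a hypothetical input format chosen for this sketch;
    lastmod values are W3C dates such as "2024-01-15".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/products/", "2024-01-10"),
    ]))
```

Save the output as sitemap.xml at a URL crawlers can reach, then submit that URL in Search Console and Bing Webmaster Tools.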

Configure Bing Webmaster Tools and import sites from Google Search Console for efficiency. Since Bing powers both Bing and Yahoo search results, submitting to Bing Webmaster Tools effectively covers multiple search engines with minimal additional effort. The import feature allows you to transfer your Google Search Console data, streamlining the setup process.

Submit sitemaps to Bing Webmaster Tools to cover both Bing and Yahoo search engines. While Google dominates with over 90% market share, Bing still represents a significant portion of search traffic, particularly in certain demographics and geographic regions. Yahoo search results are powered by Bing’s index, so submission to Bing automatically covers Yahoo as well.

Use URL Inspection tool in Search Console to submit individual pages for faster indexing. This tool allows you to request indexing for specific pages, particularly useful for new content, updated pages, or time-sensitive information. The URL Inspection tool also provides detailed information about how Google sees and processes individual pages on your site.

DuckDuckGo draws most of its web results from Bing, so it requires no separate submission. Because smaller search engines like DuckDuckGo largely source their results from existing indexes, focusing your submission efforts on Google and Bing provides comprehensive coverage across the search landscape.

Using Google Search Console

Google Search Console is a free, essential tool for website owners who want to monitor and improve their site’s performance in Google Search results. With Search Console, you gain valuable insights into how Google crawls, indexes, and ranks your website, allowing you to identify and fix technical issues that could impact your visibility. The platform enables you to submit sitemaps, request indexing for new or updated pages, and access detailed reports on search traffic, impressions, and click-through rates. By verifying your website in Google Search Console, you can track which queries bring users to your site, see how your pages appear in search results, and refine your SEO strategies for better results. Regular use of Search Console helps ensure your website remains accessible to Google, supports ongoing SEO efforts, and maximizes your site’s potential to attract free, organic traffic from Google Search.

Optimizing Your Website for Search Engine Discovery

Creating a website structure that search engines can easily crawl and understand forms the foundation of successful listing in search engine results. Your site’s architecture directly impacts how efficiently search engines can discover and index your content.

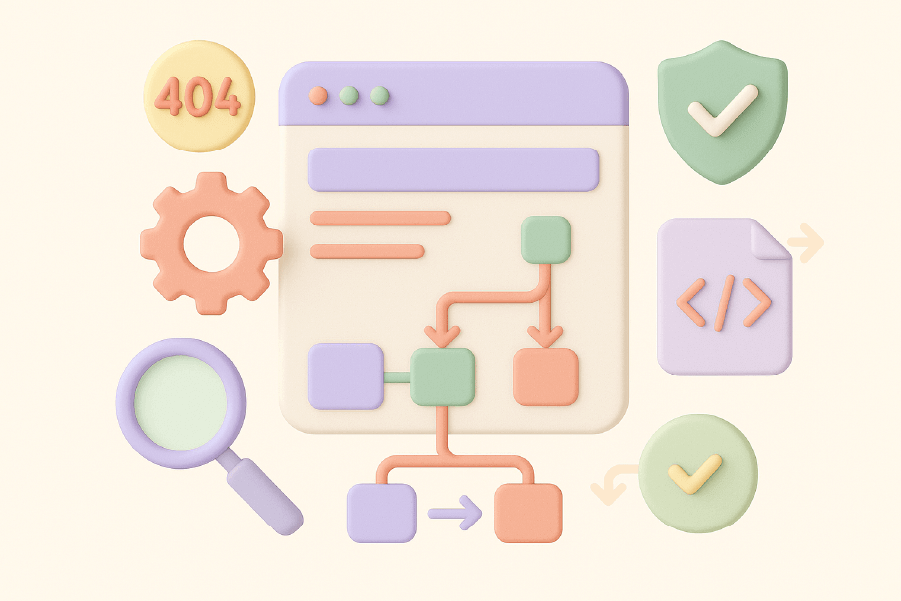

Create clean, logical site architecture with descriptive URLs and directory structures. Search engines favor websites with clear hierarchical organization, where users and crawlers can easily navigate from the homepage to any given page within a few clicks. Descriptive URLs that include relevant keywords help both users and search engines understand page content before visiting.

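Implement a proper robots.txt file to guide search engine crawlers effectively. This file tells search engines which parts of your site to crawl and which to avoid, helping you manage crawl budget and keep crawlers away from duplicate or low-value sections. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in results if other pages link to it, so use a noindex directive (on a page crawlers can reach) or authentication for content that must stay out of search results. A well-configured robots.txt file ensures crawlers focus on your most important pages.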
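Before publishing a robots.txt file, it can help to test which URLs it allows. The sketch below does that with Python’s built-in `urllib.robotparser`. The sample rules, the example.com URLs, and the `crawl_permissions` helper are assumptions for illustration, and real crawlers may resolve conflicting rules differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def crawl_permissions(robots_txt, urls, user_agent="Googlebot"):
    """Map each URL to True/False: may this user agent crawl it?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    checks = crawl_permissions(ROBOTS_TXT, [
        "https://www.example.com/products/",
        "https://www.example.com/admin/login",
    ])
    for url, allowed in checks.items():
        print("allowed" if allowed else "blocked", url)
```

Running a check like this against your most important URLs is a cheap way to catch an overly broad Disallow rule before it reaches production.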

Use meta tags including title tags, meta descriptions, and robots directives appropriately. Title tags serve as headlines in search results and significantly impact click-through rates, while meta descriptions provide brief summaries that encourage users to visit your site. These elements help search engines understand page content and improve your site’s appearance in search results.
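To see which of these tags a page actually exposes, a small parser can pull them out of the HTML. This is a minimal sketch using Python’s standard `html.parser`; the `HeadTagParser` class and `read_head_tags` helper are names invented for this example, and the sample markup is hypothetical.

```python
from html.parser import HTMLParser


class HeadTagParser(HTMLParser):
    """Collect the <title> text and named <meta> tags from a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name"):
            self.meta[attrs["name"].lower()] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def read_head_tags(html):
    """Return the title, meta description, and robots directive of a page."""
    parser = HeadTagParser()
    parser.feed(html)
    return {
        "title": parser.title.strip(),
        "description": parser.meta.get("description", ""),
        "robots": parser.meta.get("robots", ""),
    }


if __name__ == "__main__":
    page = (
        "<html><head><title>Blue Widgets | Example Store</title>"
        '<meta name="description" content="Hand-made blue widgets.">'
        '<meta name="robots" content="index, follow">'
        "</head><body></body></html>"
    )
    print(read_head_tags(page))
```

An empty title or description, or a robots value containing noindex on a page you want listed, is worth investigating.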

Ensure all important pages are linked internally and discoverable through site navigation. Internal linking distributes authority throughout your site and helps search engines understand the relationship between different pages. Using descriptive anchor text in your internal links is important for both users and search engines, as it provides context about the linked page and can influence how search engines interpret the relevance of your content. Pages that aren’t linked from other pages (orphan pages) may never be discovered by search engine crawlers.
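One way to look for orphan pages is to walk your internal links the way a crawler would and list whatever is never reached. The sketch below assumes you already have a map of each page to the pages it links to (for example, exported from a site crawler). The `find_orphan_pages` helper and the sample paths are hypothetical.

```python
from collections import deque


def find_orphan_pages(links, homepage):
    """Return known pages that cannot be reached by following links
    from the homepage.

    `links` maps each page to the list of pages it links to.
    """
    reachable = {homepage}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)

    # Every page we know about: link sources plus link targets.
    all_pages = set(links)
    for targets in links.values():
        all_pages.update(targets)
    return sorted(all_pages - reachable)


if __name__ == "__main__":
    site_links = {
        "/": ["/products", "/about"],
        "/products": ["/products/widget"],
        "/about": [],
        "/products/widget": [],
        "/old-landing": ["/products"],  # nothing links to this page
    }
    print(find_orphan_pages(site_links, "/"))
```

Pages in the result need at least one internal link from a reachable page (or a sitemap entry) before crawlers are likely to find them.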

Sites linking to your website from external sources also help search engines discover and index your content. Backlinks from other sites act as pathways for search engines to find your pages, and submitting a sitemap can further assist with indexing if there are few or no external sites linking to you.

Optimize crawl budget by blocking unimportant pages like duplicates and staging environments. Search engines allocate limited crawling resources to each site, so directing crawlers toward your most valuable content improves indexing efficiency. Block access to administrative pages, duplicate content, and low-value pages that don’t contribute to your search visibility goals.

Create custom 404 pages and implement proper redirect strategies to maintain link equity. When pages are moved or deleted, proper redirects ensure that both users and search engines are directed to relevant alternative content, preserving the value of existing links and maintaining positive user experience.

Technical Requirements for Listing Success

Ensure search engines can access CSS, JavaScript, and other resources needed to render pages properly. Modern search engines execute JavaScript and apply CSS styles to understand how pages appear to users, making resource accessibility crucial for accurate indexing and ranking evaluation.

Avoid blocking crawlers from accessing critical site functionality through robots.txt restrictions. Overly restrictive robots.txt files can prevent search engines from fully understanding your site’s content and functionality, potentially impacting your search visibility.

Use structured data markup to help search engines understand your content better. Schema.org markup provides additional context about your content, enabling rich results like product cards, reviews, and business information in search results. Using structured data can help Google better understand and display your pages in search results. This enhanced presentation can significantly improve click-through rates from search results.
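Structured data is usually embedded as a JSON-LD script tag. The sketch below generates one for a local business using schema.org’s `LocalBusiness` and `PostalAddress` types. The `local_business_jsonld` helper and all business details are placeholders invented for this example, and Google’s documentation lists which properties each rich result type requires.

```python
import json


def local_business_jsonld(name, url, telephone, street, city, postal_code, country):
    """Return a JSON-LD <script> tag describing a local business."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "postalCode": postal_code,
            "addressCountry": country,
        },
    }
    # Escape "</" so a value can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    print(local_business_jsonld(
        name="Example Bakery",
        url="https://www.example.com/",
        telephone="+1-555-010-0000",
        street="1 Main St",
        city="Springfield",
        postal_code="00000",
        country="US",
    ))
```

Paste the output into the page’s head, then validate it with a structured data testing tool before relying on it.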

Implement proper canonical tags to prevent duplicate content issues that can confuse search engines about which version of similar content to index. Canonical tags help consolidate ranking signals for similar or identical content, ensuring search engines focus on your preferred version.
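A common source of duplicates is the same page reached through tracking parameters. The sketch below shows one possible way to derive a canonical URL and its link tag with `urllib.parse`. The `TRACKING_PARAMS` list and both helpers are assumptions for illustration, as is the choice to sort the remaining parameters; adjust them to how your own site treats query strings.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed (for this sketch) never to change page content.
TRACKING_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
}


def canonical_url(url):
    """Drop tracking parameters and fragments, and normalize host case."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    query.sort()  # assumes parameter order does not matter on this site
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),
        parts.path or "/",
        urlencode(query),
        "",  # fragments are never part of a canonical URL
    ))


def canonical_link_tag(url):
    """Return the <link rel="canonical"> tag for a page's head."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


if __name__ == "__main__":
    print(canonical_link_tag(
        "https://WWW.Example.com/shoes?utm_source=news&color=red#top"
    ))
```

Every variant of a URL should emit the same tag, so search engines consolidate signals on one address.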

Monitor server errors and crawl issues through Google Search Console reports. Regular monitoring helps identify technical problems that could prevent proper crawling and indexing of your content. Server errors, blocked resources, and other technical issues can significantly impact your search engine visibility.

Image Optimization for Search Engine Listing

Optimizing images is a key part of search engine optimization (SEO) that can significantly boost your website’s visibility in search engine results, including Google Images and local search results. To ensure your images contribute to your SEO efforts, use high quality images with descriptive alt text that accurately describes the content of each image. This not only helps search engines understand your images but also improves accessibility for users with visual impairments. Additionally, optimize image file names with relevant keywords and compress images to reduce file size, which enhances page load speed and user experience. Google Images uses sophisticated algorithms to rank images based on relevance, quality, and user engagement, so well-optimized images are more likely to appear in search engine results and attract potential customers. By focusing on image optimization, businesses can increase their chances of being discovered by users searching for products, services, or information, driving more targeted traffic to their website.

Content Optimization for Better Listing Performance

High-quality, relevant content forms the cornerstone of successful search engine listing and ranking performance. Search engines prioritize content that provides value to users and answers their questions comprehensively.

Create unique, valuable content that answers user questions directly and comprehensively. Focus on the specific needs and questions of your target audience, and lead with a short answer to common questions where that improves clarity. Search engines reward content that demonstrates expertise, authority, and trustworthiness in its subject matter.

Use descriptive, keyword-rich titles and headings that accurately describe page content without resorting to keyword stuffing. Titles should be compelling enough to encourage clicks from search results while clearly communicating the page’s topic and value proposition.

Write compelling meta descriptions that encourage clicks from search results. While meta descriptions don’t directly impact rankings, they significantly influence click-through rates from search results. The way your page appears as a search result—including the title, URL, and snippet—can impact user decisions. A well-written meta description acts as advertising copy, convincing users that your page contains the information they seek.

Include relevant keywords naturally throughout your content without keyword stuffing. Focus on creating content that flows naturally while incorporating terms and phrases your target audience uses when searching for information related to your topic. Review your content regularly to remove or update keywords and topics that are not relevant anymore.

Add high-quality images with descriptive alt text to improve accessibility and SEO. Images enhance user experience and can appear in Google Images search results, providing additional traffic opportunities, while alt text helps search engines understand image content and supports users with visual impairments. If your site features videos, optimize them for search engines as well to improve visibility in search results.

Link to relevant internal and external resources to provide additional value and context. Internal links help users discover related content on your site while distributing authority throughout your site structure. External links to authoritative sources can enhance your content’s credibility and provide additional value to users. Earning links from other websites is also crucial for improving your site’s authority and search engine rankings.

Monitoring and Maintaining Your Search Engine Presence

Successful listing in search engine results requires ongoing monitoring and optimization. Regular assessment of your site’s search performance helps identify opportunities for improvement and potential issues that could impact visibility.

Use the “site:yourdomain.com” search operator to see roughly how many of your pages are in a search engine’s index. This simple query provides a quick overview of your site’s indexing status, but the counts are estimates, so rely on Search Console reports for detailed analysis.

Monitor the Page indexing report (formerly Index Coverage) in Google Search Console for crawl and indexing issues. This report identifies pages that couldn’t be indexed and gives a reason for each, helping you address issues that prevent proper listing in search engine results.

Track search performance metrics including impressions, clicks, and average position for your target keywords. Search Console provides detailed performance data showing how your pages appear in search results and which queries drive traffic to your site. Additionally, monitor your Google rankings for target keywords to assess progress and understand how engagement metrics may correlate with higher rankings.
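Click-through rate ties these metrics together: it is clicks divided by impressions. The sketch below computes it for a few rows of performance data and sorts the weakest performers first. The row format, the numbers, and the `add_ctr` helper are hypothetical and stand in for whatever you export from Search Console.

```python
def add_ctr(rows):
    """Return the rows with a 'ctr' field added, lowest CTR first."""
    enriched = []
    for row in rows:
        impressions = row["impressions"]
        # Guard against division by zero for queries with no impressions.
        ctr = row["clicks"] / impressions if impressions else 0.0
        enriched.append({**row, "ctr": ctr})
    return sorted(enriched, key=lambda row: row["ctr"])


if __name__ == "__main__":
    sample = [
        {"query": "blue widgets", "clicks": 30, "impressions": 1000, "position": 4.2},
        {"query": "widget repair", "clicks": 5, "impressions": 1000, "position": 3.1},
    ]
    for row in add_ctr(sample):
        print(f'{row["query"]}: CTR {row["ctr"]:.1%} at position {row["position"]}')
```

A query that ranks well but has a low CTR is often a candidate for a better title or meta description.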

Submit updated sitemaps when adding new content or making significant site changes. Regular sitemap updates help search engines discover new pages and content modifications more quickly, ensuring your latest content gets indexed promptly.

Request indexing of updated pages through URL Inspection tool when needed. For time-sensitive content or important page updates, manual indexing requests can accelerate the process of getting new content included in search results.

Regular monitoring helps identify and fix issues that could impact search visibility. Review how your site appears on the search engine results page to optimize for better visibility and click-through rates. Avoid services that try to sell unnecessary submission or optimization tactics—focus on quality content and proper website optimization instead. Proactive monitoring allows you to address problems before they significantly impact your search performance, maintaining consistent visibility in search results.

Local Business Listing Considerations

Local businesses require additional listing strategies to appear in local search results and Google Maps. Local search optimization involves specific tactics beyond general search engine listing.

Claim and optimize your Google Business Profile (formerly Google My Business) for local search visibility. A Business Profile is crucial for appearing in local results and Google Maps, providing essential business information directly in search results.

Ensure consistent NAP (Name, Address, Phone) information across all listings and directories. Consistent business information across the web helps search engines verify your business legitimacy and improves local ranking signals.
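A quick script can flag listings whose NAP details differ only in ways people tend to miss. The sketch below normalizes case, whitespace, and phone punctuation, then groups listings that match. The directory names and business details are invented, and the normalization is deliberately simple: it does not reconcile abbreviations such as "St" and "Street" or country codes.

```python
import re
from collections import defaultdict


def _clean(text):
    """Lowercase and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", text).strip().lower()


def normalize_nap(name, address, phone):
    """Reduce a listing to a comparable (name, address, digits) tuple."""
    digits = re.sub(r"\D", "", phone)
    return (_clean(name), _clean(address), digits)


def group_listings(listings):
    """Group listing sources by their normalized NAP.

    `listings` maps a source name to a (name, address, phone) tuple.
    More than one group means the listings disagree somewhere.
    """
    groups = defaultdict(list)
    for source, (name, address, phone) in listings.items():
        groups[normalize_nap(name, address, phone)].append(source)
    return dict(groups)


if __name__ == "__main__":
    listings = {
        "website": ("Example Bakery", "1 Main St, Springfield", "(555) 010-0000"),
        "directory_a": ("Example  Bakery", "1 main st, springfield", "555-010-0000"),
        "directory_b": ("Example Bakery", "1 Main Street, Springfield", "555 010 0000"),
    }
    for nap, sources in group_listings(listings).items():
        print(sources, "->", nap)
```

Here the third listing ends up in its own group because of the spelled-out "Street", which is exactly the kind of mismatch worth correcting at the source.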

Submit to relevant local directories and industry-specific listing sites. Local directories provide additional visibility and can drive direct traffic while supporting your overall local search strategy.

Optimize for local keywords and location-based search queries. Include location-specific terms in your content and metadata to help customers find your business when searching for local products or services.

Encourage customer reviews to improve local search prominence. Positive reviews on your Google Business Profile and other platforms enhance your local search visibility and provide social proof for potential customers.

Common Listing Mistakes to Avoid

Understanding common pitfalls in search engine listing helps prevent issues that could delay or prevent proper indexing of your website.

Avoid paid submission services that promise instant search engine listing for fees. Legitimate search engines provide free submission through their webmaster tools, making paid services unnecessary and potentially harmful.

Don’t block search engines accidentally through robots.txt or meta noindex tags. Incorrect configuration of these directives can prevent search engines from crawling and indexing your most important content.

Prevent duplicate content issues that can confuse search engines about canonical versions of your content. Implement proper canonical tags and avoid publishing the same content across multiple URLs without proper consolidation signals.

Avoid using generic or auto-generated titles and descriptions that don’t describe content accurately. Each page should have unique, descriptive metadata that helps both users and search engines understand the page’s content and purpose.

Don’t submit the same sitemap multiple times or include blocked URLs in sitemaps. Clean, accurate sitemaps help search engines efficiently crawl your site, while poorly maintained sitemaps can waste crawl budget.

Avoid redirect chains that can slow down crawling and waste crawl budget. Direct redirects from old URLs to new destinations preserve link equity more effectively than multiple redirect hops.
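If you keep your redirects in a simple old-to-new map, chains can be collapsed before they ever reach the server configuration. The following is a minimal sketch of that idea; the `flatten_redirects` helper and the sample paths are hypothetical.

```python
def flatten_redirects(redirects):
    """Point every old URL straight at its final destination.

    `redirects` maps an old URL to the URL it currently redirects to.
    Raises ValueError if the map contains a redirect loop.
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that does not redirect.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


if __name__ == "__main__":
    chain = {
        "/old-page": "/newer-page",
        "/newer-page": "/current-page",
    }
    print(flatten_redirects(chain))
```

After flattening, each old URL needs only a single hop, and loops are reported instead of being silently deployed.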

Advanced Listing Strategies

For complex websites and competitive markets, advanced strategies can improve search engine listing success and overall visibility.

Handle e-commerce filtering and faceted navigation with canonical tags, robots.txt rules, and consistent internal linking. Google retired the URL Parameters tool in Search Console in 2022, so these on-site signals are now how you indicate which parameterized URLs duplicate existing content and which provide unique value.

Implement hreflang tags for international sites with multiple language versions. These tags help search engines serve the appropriate language and regional version of your content to users in different locations.
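Hreflang annotations are a set of link tags that every language version repeats, listing all versions including itself. The sketch below generates that set from a map of language codes to URLs. The `hreflang_tags` helper, the language codes, and the example.com URLs are assumptions for illustration; the `x-default` entry marks the fallback version.

```python
from html import escape


def hreflang_tags(versions, default_lang):
    """Return hreflang <link> tags for all language versions of a page.

    `versions` maps a language or language-region code to that version's URL;
    `default_lang` names the version to use as the x-default fallback.
    """
    tags = [
        f'<link rel="alternate" hreflang="{lang}" href="{escape(url)}">'
        for lang, url in sorted(versions.items())
    ]
    fallback = escape(versions[default_lang])
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}">')
    return "\n".join(tags)


if __name__ == "__main__":
    print(hreflang_tags(
        {
            "en-us": "https://www.example.com/us/",
            "de-de": "https://www.example.com/de/",
        },
        default_lang="en-us",
    ))
```

The same block of tags goes into the head of each version, since search engines expect the annotations to be reciprocal.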

Optimize for featured snippets by structuring content to answer common questions clearly and concisely. Featured snippets appear at the top of search results and can significantly increase visibility and traffic.

Submit video sitemaps for sites with significant video content. Video sitemaps help search engines discover and index multimedia content, making it eligible for video search results and enhanced SERP features.

Use the Removals tool in Google Search Console to quickly hide outdated content from search results. The removal is temporary (about six months), so it buys time for sensitive or obsolete content while you work on permanent solutions such as deleting the page or adding a noindex directive.

Monitor and optimize Core Web Vitals for better user experience and ranking signals. Page speed, interactivity, and visual stability increasingly influence search rankings and user satisfaction.
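Core Web Vitals are currently measured with three metrics: Largest Contentful Paint (loading), Interaction to Next Paint (interactivity), and Cumulative Layout Shift (visual stability). The sketch below rates a measurement against Google’s published "good" and "poor" thresholds. The `rate_metric` helper and the metric keys are names chosen for this example, and the thresholds should be checked against current documentation since they can change.

```python
# (good_limit, poor_limit) for each metric, per Google's published guidance.
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),       # Largest Contentful Paint
    "inp_milliseconds": (200, 500),  # Interaction to Next Paint
    "cls": (0.1, 0.25),              # Cumulative Layout Shift (unitless)
}


def rate_metric(metric, value):
    """Classify a measurement as good, needs improvement, or poor."""
    good_limit, poor_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    measurements = {"lcp_seconds": 2.1, "inp_milliseconds": 350, "cls": 0.3}
    for metric, value in measurements.items():
        print(metric, value, rate_metric(metric, value))
```

Field data for these metrics appears in the Core Web Vitals report in Search Console.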

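Use structured data for rich results, such as product cards or recipes, where Google supports them for your type of site; FAQ rich results, for example, are now shown only for a limited set of sites. Enhanced SERP appearances can improve click-through rates and provide more valuable information to users directly in search results.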

FAQ

How long does it take for a website to get listed in search engines?

Typically 1-4 weeks for new sites, though established sites with good link profiles may be indexed within hours or days. Factors like site authority, content quality, and technical optimization significantly impact indexing speed.

Do I need to submit my website to every search engine individually?

Focus on Google and Bing, as they cover 95%+ of search traffic. Bing submission also covers Yahoo automatically, while other search engines often source results from these major indexes.

Can I pay to get my website listed faster in search engines?

No, legitimate search engines offer free listing through their webmaster tools. Paid submission services are unnecessary and often scams that don’t provide legitimate value.

What should I do if my website isn’t appearing in search results?

Check for technical issues blocking crawlers, submit sitemaps through Search Console, ensure content quality meets search engine guidelines, and verify there are no blocking directives preventing indexing.

How often should I submit updated sitemaps?

Submit new sitemaps when adding significant new content or making major site changes. Minor updates are usually discovered automatically through regular crawling, but sitemap updates can accelerate the process.

Is it necessary to submit individual pages to search engines?

Generally no, as search engines discover most pages through natural crawling and internal linking. However, you can use URL inspection tools to expedite indexing of important new or updated pages when immediate visibility is crucial.

Search engine listing success requires patience, technical attention to detail, and ongoing optimization efforts. By following these fundamental practices and maintaining consistent monitoring, you’ll establish a strong foundation for search visibility that supports long-term organic traffic growth. Start with the basics (set up Search Console and Bing Webmaster Tools accounts, submit your sitemaps, and ensure your site meets technical requirements), then build toward more advanced optimization strategies as your understanding and needs evolve.